K8S Troubleshooting

2020-03-03 11:07

admin

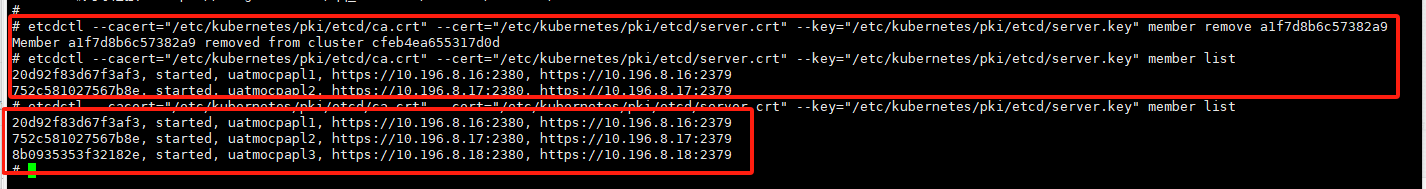

# New master cannot join the HA cluster

#### The original master node failed and could not be recovered, so a new VM was joined to the cluster as a master.

---

#### Solution: on the healthy etcd cluster, remove the etcd member that belonged to the old, failed node.

---

# Nacos vulnerability:

https://help.aliyun.com/zh/mse/product-overview/nacos-security-risk-description-about-nacos-default-token-secret-key-risk-description

---

## Installation issues

### Error:

> #### error execution phase preflight: unable to fetch the kubeadm-config ConfigMap: failed to decode cluster configuration data: no kind "ClusterConfiguration" is registered for version "kubeadm.k8s.io/v1beta2"

### Solution:

> The kubeadm version running `join` does not match the kubeadm version that ran `init`. Upgrade kubeadm so the versions match.

### Error:

> #### When a node joins the master:
> error execution phase kubelet-start: error uploading crisocket: timed out waiting for the condition

### Solution:

```shell
swapoff -a    # turn off swap
kubeadm reset
systemctl daemon-reload
systemctl restart kubelet
iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X
```

### Problem 1:

> #### Unable to connect to the server: x509: certificate signed by unknown authority (possibly because of "crypto/rsa: verification error" while trying to verify candidate authority certificate "kubernetes")

Solution:

```shell
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

## Error:

> #### failed to update node lease, error: Operation cannot be fulfilled

Cause: the joined node cannot be updated because its hostname is identical to the master's hostname.

Solution: change the hostname on the node, then reset and join again:

```shell
hostname work1
# reset kubeadm
kubeadm reset
# join again
kubeadm join <masterIP>:6443 --token <TOKEN> --discovery-token-ca-cert-hash <discovery-token-ca-cert-hash>
```

## Error:

> #### failed to set bridge addr: "cni0" already has an IP address different from 10.244.1.1/24

Delete the misconfigured cni0 interface. It is recreated automatically afterwards:

```shell
sudo ifconfig cni0 down
sudo ip link delete cni0
```

## Error:

> #### k8s DNS hostnames do not resolve

The coredns pods stay in CrashLoopBackOff.

```shell
vim /etc/resolv.conf   # temporarily change nameserver to 114.114.114.114 (a workaround only)
```

For the root cause and the permanent fix, see "Fix Ubuntu overwriting the DNS server with 127.0.0.53".

```shell
kubectl edit deployment coredns -n kube-system   # set replicas to 0 to stop the running coredns pods
kubectl edit deployment coredns -n kube-system   # set replicas back to 2 so coredns re-reads the system config
kubectl get pods -n kube-system                  # check that the pods are Running
```

## Pod network unreachable across nodes, flannel not working

```shell
systemctl stop kubelet
systemctl stop docker
iptables --flush
iptables -t nat --flush
systemctl restart kubelet
systemctl restart docker
```

---

## Pod network unreachable

#### Cluster nodes with multiple NICs: how to make calico/flannel use a specific NIC

---

#### 1. calico: if some nodes have multiple NICs, specify the internal NIC in the env of the calico-node DaemonSet

---

```yaml
spec:
  containers:
  - env:
    - name: DATASTORE_TYPE
      value: kubernetes
    - name: IP_AUTODETECTION_METHOD   # add this variable to the DaemonSet
      value: "interface=ens33,eth0"   # the internal NIC(s)
    - name: WAIT_FOR_DATASTORE
      value: "true"
```

---

#### 2. flannel: if some nodes have multiple NICs, specify the NIC in the args of the kube-flannel container

```yaml
containers:
- name: kube-flannel
  image: quay.io/coreos/flannel:v0.10.0-amd64
  command:
  - /opt/bin/flanneld
  args:
  - --ip-masq
  - --kube-subnet-mgr
  - --iface=ens33   # --iface may be repeated; flannel uses the first match, in order
  - --iface=eth0
```

---

## The following error appears when pressing Tab after `kubectl`

```shell
[root@master bin]# kubectl c-bash: _get_comp_words_by_ref: command not found
```

## Solution:

1. Install bash-completion:

```shell
[root@master bin]# yum install bash-completion -y
```

2. Source bash_completion:

```shell
[root@master bin]# source /usr/share/bash-completion/bash_completion
```

3. Reload kubectl completion:

```shell
[root@master bin]# source <(kubectl completion bash)
```
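For the "new master cannot join the HA cluster" case, the failed master's etcd member has to be removed from the healthy etcd cluster. The sketch below shows one way to do that. It assumes a stacked etcd created by kubeadm, with certificates at kubeadm's default paths under `/etc/kubernetes/pki/etcd`, and `etcdctl` v3 on a healthy master. The helper names `etcdctl_k8s`, `etcd_member_id` and `remove_etcd_member` are hypothetical.

```shell
# Hypothetical helpers for removing a dead master's member from a stacked etcd.
# Endpoint and cert paths are kubeadm defaults (assumption); override via env if different.
ETCD_ENDPOINT="${ETCD_ENDPOINT:-https://127.0.0.1:2379}"
ETCD_PKI="${ETCD_PKI:-/etc/kubernetes/pki/etcd}"

# Run etcdctl (API v3) against the local etcd with kubeadm's client certificates.
etcdctl_k8s() {
  ETCDCTL_API=3 etcdctl \
    --endpoints="$ETCD_ENDPOINT" \
    --cacert="$ETCD_PKI/ca.crt" \
    --cert="$ETCD_PKI/server.crt" \
    --key="$ETCD_PKI/server.key" \
    "$@"
}

# Read `etcdctl member list` output on stdin (ID, status, name, peer URLs, client URLs[, learner])
# and print the ID of the member whose name equals $1.
etcd_member_id() {
  awk -F', ' -v name="$1" '$3 == name { print $1 }'
}

# Remove the etcd member named $1 (normally the failed master's hostname).
remove_etcd_member() {
  local id
  id=$(etcdctl_k8s member list | etcd_member_id "$1")
  if [ -z "$id" ]; then
    echo "no etcd member named $1" >&2
    return 1
  fi
  etcdctl_k8s member remove "$id"
}
```

Run `remove_etcd_member <old-master-hostname>` on a healthy master. Then run `kubectl delete node <old-master-hostname>` before joining the new machine.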
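The "no kind ClusterConfiguration is registered" error above comes from a kubeadm version mismatch between `init` and `join`. A quick pre-join check is to compare the major.minor of `kubeadm version -o short` on the master and on the joining node. The helpers `k8s_minor` and `same_minor` are hypothetical, and treating "same major.minor" as "compatible" is a simplifying assumption.

```shell
# Hypothetical helper: print the major.minor part of a version string such as "v1.24.3" -> "1.24".
k8s_minor() {
  local v="${1#v}"    # strip a leading "v"
  echo "${v%.*}"      # drop the patch component
}

# Hypothetical helper: succeed if two kubeadm versions share the same major.minor.
same_minor() {
  [ "$(k8s_minor "$1")" = "$(k8s_minor "$2")" ]
}
```

Example: `same_minor "$(kubeadm version -o short)" v1.24.3 || echo "install the kubeadm version used by init"`. Here `v1.24.3` stands for whatever the master reports.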
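`swapoff -a` in the "error uploading crisocket" fix only lasts until the next reboot. By default kubelet refuses to start while swap is enabled, so the swap entries in `/etc/fstab` usually need to be commented out as well. The sketch below assumes a standard fstab layout. `swap_active` and `disable_swap_in_fstab` are hypothetical helper names.

```shell
# Hypothetical helper: succeed if any swap area is active.
# /proc/swaps has one header line, then one line per active swap area.
swap_active() {
  awk 'NR > 1 { found = 1 } END { exit !found }' "${1:-/proc/swaps}"
}

# Hypothetical helper: comment out uncommented fstab lines whose fields include "swap",
# so swap stays off after a reboot. sed keeps a .bak copy of the original file.
disable_swap_in_fstab() {
  sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "${1:-/etc/fstab}"
}
```

Typical use as root: `swapoff -a && disable_swap_in_fstab && ! swap_active && echo "swap is off"`.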
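The x509 "certificate signed by unknown authority" error is typically caused by a stale `~/.kube/config` left over from an earlier cluster, for example after `kubeadm reset` and a fresh `kubeadm init`. That stale file carries the old CA. The sketch below does the same copy as the steps above but backs up a differing old config first. It assumes the caller can read `/etc/kubernetes/admin.conf` (e.g. runs as root). `install_admin_kubeconfig` is a hypothetical name.

```shell
# Hypothetical helper: install admin.conf as a kubeconfig, keeping a timestamped
# backup of an existing config that differs from it.
install_admin_kubeconfig() {
  local src="${1:-/etc/kubernetes/admin.conf}" dst="${2:-$HOME/.kube/config}"
  mkdir -p "$(dirname "$dst")"
  if [ -f "$dst" ] && ! cmp -s "$src" "$dst"; then
    cp "$dst" "$dst.bak.$(date +%s)"   # keep the stale config for reference
  fi
  cp "$src" "$dst"
  chmod 600 "$dst"                     # kubeconfig holds admin credentials
}
```

For a non-root user, run the copy with `sudo` and `chown` the result as in the steps above.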
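For the "failed to update node lease" case caused by duplicate hostnames, two things help: check the hostname against the existing node names before joining, and rename the node persistently. `hostname work1` only changes the name until the next reboot, whereas `hostnamectl set-hostname` persists it. `name_in_list` and `rename_node` are hypothetical helpers.

```shell
# Hypothetical helper: succeed if $1 equals any of the remaining arguments.
name_in_list() {
  local name="$1" n
  shift
  for n in "$@"; do
    [ "$n" = "$name" ] && return 0
  done
  return 1
}

# Hypothetical helper: rename this machine persistently (survives reboots).
rename_node() {
  hostnamectl set-hostname "$1"
}
```

The existing node names can be listed on the master with `kubectl get nodes -o jsonpath='{.items[*].metadata.name}'`. On the joining node, run `name_in_list "$(hostname)" <those names>` to check for a conflict.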
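The cni0 fix above can be made conditional: delete the bridge only when its address differs from the subnet flannel assigned to the node. This assumes flannel writes its lease to the default `/run/flannel/subnet.env` (with a `FLANNEL_SUBNET=10.244.1.1/24`-style line). `flannel_subnet`, `cni0_addr` and `fix_cni0` are hypothetical names.

```shell
# Hypothetical helper: print FLANNEL_SUBNET from flannel's subnet.env.
flannel_subnet() {
  sed -n 's/^FLANNEL_SUBNET=//p' "${1:-/run/flannel/subnet.env}"
}

# Hypothetical helper: print cni0's current IPv4 address in CIDR form, if the bridge exists.
cni0_addr() {
  ip -4 -o addr show dev cni0 2>/dev/null | awk '{ print $4 }'
}

# Delete cni0 only if its address differs from the flannel subnet; the bridge is
# recreated when the next pod on this node is set up.
fix_cni0() {
  local want have
  want=$(flannel_subnet "$1")
  if [ -z "$want" ]; then
    echo "FLANNEL_SUBNET not found" >&2
    return 1
  fi
  have=$(cni0_addr)
  if [ -n "$have" ] && [ "$have" != "$want" ]; then
    ip link set cni0 down
    ip link delete cni0
  fi
}
```

Run `fix_cni0` as root on the node whose kubelet logs the "cni0 already has an IP address" error.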
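On the CoreDNS CrashLoopBackOff case, the CoreDNS `loop` plugin documents the usual cause. If the node's `/etc/resolv.conf` lists a loopback nameserver such as systemd-resolved's stub `127.0.0.53`, CoreDNS forwards queries back to itself and exits. The permanent fix is to point kubelet's `resolvConf` (flag `--resolv-conf`) at `/run/systemd/resolve/resolv.conf`. The sketch below checks for that condition and replaces the two `kubectl edit` steps with `kubectl scale`. `has_loopback_nameserver` and `restart_coredns` are hypothetical. `KUBECTL` is an assumed override used only for dry runs.

```shell
# Hypothetical helper: succeed if a resolv.conf lists a nameserver in 127.0.0.0/8.
has_loopback_nameserver() {
  grep -Eq '^[[:space:]]*nameserver[[:space:]]+127\.' "${1:-/etc/resolv.conf}"
}

# Hypothetical helper: scale coredns to 0 and back (default 2 replicas) so new pods
# pick up the node's DNS config. Set KUBECTL=echo to print the commands instead.
restart_coredns() {
  local replicas="${1:-2}" kubectl="${KUBECTL:-kubectl}"
  $kubectl scale deployment coredns -n kube-system --replicas=0
  $kubectl scale deployment coredns -n kube-system --replicas="$replicas"
}
```

On kubectl 1.15 and later, `kubectl -n kube-system rollout restart deployment coredns` achieves the same restart without changing the replica count.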
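For multi-NIC nodes, the internal interface name can be found from the node's IPv4 addresses instead of guessing. Calico's variable can then be set without hand-editing the YAML. This assumes a manifest-based Calico install with the `calico-node` DaemonSet in `kube-system`, and an internal network identified by an address prefix such as `192.168.10.` (a placeholder). `iface_for_prefix` and `set_calico_iface` are hypothetical.

```shell
# Hypothetical helper: read `ip -4 -o addr show` output on stdin and print the first
# interface whose IPv4 address starts with the prefix $1 (e.g. "192.168.10.").
iface_for_prefix() {
  awk -v p="$1" 'index($4, p) == 1 { print $2; exit }'
}

# Hypothetical helper: set IP_AUTODETECTION_METHOD on the calico-node DaemonSet.
# Set KUBECTL=echo to print the command instead of running it.
set_calico_iface() {
  local iface="$1" ns="${2:-kube-system}" kubectl="${KUBECTL:-kubectl}"
  $kubectl set env daemonset/calico-node -n "$ns" "IP_AUTODETECTION_METHOD=interface=$iface"
}
```

Example: `set_calico_iface "$(ip -4 -o addr show | iface_for_prefix 192.168.10.)"`. For flannel, the same interface name goes into `--iface=`.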
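The three completion steps above only affect the current shell. To keep them across logins, the two `source` lines can be appended to `~/.bashrc` once. `ensure_line` is a hypothetical helper that appends a line only if that exact line is not already present.

```shell
# Hypothetical helper: append $2 to file $1 unless the exact line already exists.
ensure_line() {
  local file="$1" line="$2"
  touch "$file"
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```

Usage: `ensure_line ~/.bashrc 'source /usr/share/bash-completion/bash_completion'` followed by `ensure_line ~/.bashrc 'source <(kubectl completion bash)'`. Running it twice does not duplicate the lines.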
