logstash

April 13, 2021, 14:50

admin

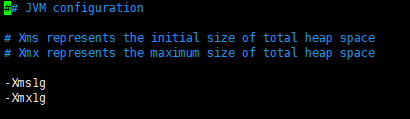

## Prerequisite (Java 1.8 must be installed first)

    yum install -y java-1.8.0-openjdk

## Download

https://www.elastic.co/cn/downloads/logstash

## Installation

    wget https://artifacts.elastic.co/downloads/logstash/logstash-7.15.1-x86_64.rpm
    rpm -iUvh logstash-7.15.1-x86_64.rpm

## Configuration

#### /etc/logstash/jvm.options (memory)

# Pipeline configuration

---

## Input (INPUT)

#### Read from a file

    input {
      file {
        path => ["/var/log/messages"]
        type => "system"
        start_position => "beginning"
      }
    }

---

#### Standard input

    input {
      stdin {
        add_field => {"key"=>"iivey"}
        tags => ["add1"]
        type => "system"
      }
    }

---

#### Read syslog messages

    input {
      syslog {
        port => "5514"
      }
    }

---

#### Read data from a TCP socket

    input {
      tcp {
        port => "5514"
      }
    }

To test it, send a file to the listening port:

    nc 192.168.100.10 5514 < /var/log/secure

---

## Codecs

#### plain

plain is an empty codec: it does no parsing, so events leave in the same format they arrived in.

    input {
      stdin {
        codec => "plain"
      }
    }

or

    output {
      stdout {}
    }

---

#### json

If the data sent to Logstash is JSON, add codec => json to the input to parse it. Fields are then generated from the content, which makes analysis and storage easier. To have Logstash emit JSON, add codec => json to the output.

    input {
      stdin {
        codec => "json"
      }
    }

or

    output {
      stdout {
        codec => "json"
      }
    }

---

## Filter (FILTER)

#### grok regex capture

#### Format: %{SYNTAX:SEMANTIC}

Input:

    172.16.213.132 [07/Feb/2018:16:24:19 +0800] "GET / HTTP/1.1" 403 5039

#### %{IP:clientip} matches:

    clientip: 172.16.213.132

#### %{HTTPDATE:timestamp} matches:

    timestamp: 07/Feb/2018:16:24:19 +0800

#### %{QS:referrer} matches:

    referrer: "GET / HTTP/1.1"

---

#### date processing

The month in the sample timestamp is abbreviated (Feb), so the pattern uses MMM rather than MM.

    filter {
      date {
        match => ["timestamp","dd/MMM/yyyy:HH:mm:ss Z"]
      }
    }

---

## Output (OUTPUT)

#### Write to standard output

    output {
      stdout {
        codec => rubydebug
      }
    }

---

#### Save to a file

    output {
      file {
        path => "/data/%{+yyyy-MM-dd}/%{host}_%{+HH}.log"
      }
    }

---

#### Write to Elasticsearch

    output {
      elasticsearch {
        hosts => ["192.168.10.100","192.168.10.101"]
        index => "apache_log"
        manage_template => false
        template => "/Users/liuxg/data/apache_template.json"
        template_name => "apache_elastic_example"
      }
    }

manage_template: whether Logstash manages the index template automatically. Setting it to false turns this off; set it to false if you maintain a custom template yourself.

template_name: the name of the template in Elasticsearch.

---

## Examples

#### apache.conf

    input {
      file {
        path => "/Users/liuxg/data/multi-input/apache.log"
        start_position => "beginning"
        sincedb_path => "/dev/null"
        # ignore_older => 100000
        type => "apache"
      }
    }
    filter {
      grok {
        match => {
          "message" => '%{IPORHOST:clientip} %{USER:ident} %{USER:auth} \[%{HTTPDATE:timestamp}\] "%{WORD:verb} %{DATA:request} HTTP/%{NUMBER:httpversion}" %{NUMBER:response:int} (?:-|%{NUMBER:bytes:int}) %{QS:referrer} %{QS:agent}'
        }
      }
    }
    output {
      stdout {
        codec => rubydebug
      }
      elasticsearch {
        hosts => ["192.168.10.100","192.168.10.101"]
        index => "apache_log"
        template => "/Users/liuxg/data/apache_template.json"
        template_name => "apache_elastic_example"
        template_overwrite => true
      }
    }

#### daily.conf

    input {
      file {
        path => "/Users/liuxg/data/multi-pipeline/apache-daily-access.log"
        start_position => "beginning"
        sincedb_path => "/dev/null"
        type => "daily"
      }
    }
    filter {
      grok {
        match => {
          "message" => '%{IPORHOST:clientip} %{USER:ident} %{USER:auth} \[%{HTTPDATE:timestamp}\] "%{WORD:verb} %{DATA:request} HTTP/%{NUMBER:httpversion}" %{NUMBER:response:int} (?:-|%{NUMBER:bytes:int}) %{QS:referrer} %{QS:agent}'
        }
      }
    }
    output {
      stdout {
        codec => rubydebug
      }
      elasticsearch {
        hosts => ["192.168.10.100","192.168.10.101"]
        index => "apache_daily"
        template => "/Users/liuxg/data/apache_template.json"
        template_name => "apache_elastic_example"
        template_overwrite => true
      }
    }

---

#### /etc/logstash/pipelines.yml (multiple pipelines)

    - pipeline.id: daily
      pipeline.workers: 1
      pipeline.batch.size: 1
      path.config: "/Users/liuxg/data/multi-pipeline/daily.conf"
    - pipeline.id: apache
      queue.type: persisted
      path.config: "/Users/liuxg/data/multi-pipeline/apache.conf"

---

## Startup

    bin/logstash -f logstash.conf         # start with a single config file
    bin/logstash -f /etc/logstash/conf/   # load every config file in the directory
    nohup bin/logstash &                  # run in the background

#### Note: without "-f", Logstash reads all .conf files under /etc/logstash/conf.d/ (the path set in the packaged default pipelines.yml).

#### Note: if multiple pipelines are not configured, Logstash merges all config files in the config directory into a single pipeline, so events from one input are written to every output.
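
When several config files are merged into one pipeline, one way to stop events from reaching every output is to guard each output with a conditional. The fragment below is a minimal sketch, not the author's configuration: it assumes each input sets a distinct `type` (as `apache.conf` and `daily.conf` above do with "apache" and "daily") and reuses the hosts and index names from those examples.

```
output {
  # Assumption: the file input in apache.conf sets type => "apache".
  if [type] == "apache" {
    elasticsearch {
      hosts => ["192.168.10.100","192.168.10.101"]
      index => "apache_log"
    }
  # Assumption: the file input in daily.conf sets type => "daily".
  } else if [type] == "daily" {
    elasticsearch {
      hosts => ["192.168.10.100","192.168.10.101"]
      index => "apache_daily"
    }
  }
}
```

Conditionals keep everything in a single pipeline. Declaring separate pipelines in pipelines.yml, as shown earlier, isolates the inputs and outputs completely and is usually the cleaner choice when the flows are unrelated.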
